Call for Papers

For ECCV 2024, we invite paper submissions focused on Multimodal Agents (MMAs), a dynamic field dedicated to creating systems that generate effective actions in various environments by interpreting multimodal sensory inputs. The rise of Large Language Models (LLMs) and Vision-Language Models (VLMs) has led to significant advancements in this area, impacting a broad spectrum from foundational research to practical applications. Our workshop will delve into how these developments integrate with traditional domain-specific technologies, such as visual question answering and vision-language navigation. We are especially interested in contributions that explore:

- Embodied Systems: The usage of MMAs in embodied systems, both physical and virtual.

- Interactive Agents: The creation of multimodal agents that can interact with humans.

- Agent Infrastructure: Infrastructure designed to improve the ability to create MMAs or improve the capabilities of MMAs.

- Applications: The application of MMAs in diverse research areas including, but not limited to, traditional multimodal understanding tasks, robotics, and gaming.

Submissions should address common interests in these fields, such as data collection, benchmarking, and ethical considerations. This workshop aims to serve as a forum for sharing comprehensive insights and discussing the shared challenges in the field of Multimodal Agents, ultimately contributing to the foundational understanding and further advancement of this new, exciting research area.

We will use this CMT3 submission page for all workshop submissions. All submissions will be due by Sunday July 28th 11:59pm Pacific Time. Authors should strictly adhere to the official ECCV 2024 guidelines and use the ECCV 2024 formatting template for submissions. Papers are limited to 14 pages. In addition to full workshop papers, we also accept extended abstracts via the same submission link. You may use either the ECCV 2024 guidelines or CVPR 2024 guidelines for extended abstract submissions (4 pages maximum).

Important Dates

- Updated submission deadline: July 28th 11:59pm Pacific Time

- Author Notification: August 15th (AoE)

- Camera-ready Deadline: August 26th (AoE; originally August 22nd)

Workshop Materials

Download links will be available

Timetable Schedule

| Time Slot | Speaker(s) | Details |

|---|---|---|

| 14:00 - 14:20 | Juan Carlos Niebles | Opening Remarks |

| 14:20 - 14:55 | Karl Pertsch | Vision-Language-Action Models for Learning Robot Policies at Scale |

| 14:55 - 15:30 | Qing Li | Multimodal Generalist Agents |

| 15:30 - 16:00 | Coffee Break | |

| 16:00 - 16:20 | Lightning Talks | |

| 16:20 - 17:00 | Poster session | |

| 17:00 - 17:20 | Tiffany Min | Situated Instruction Following and Multimodal Reasoning |

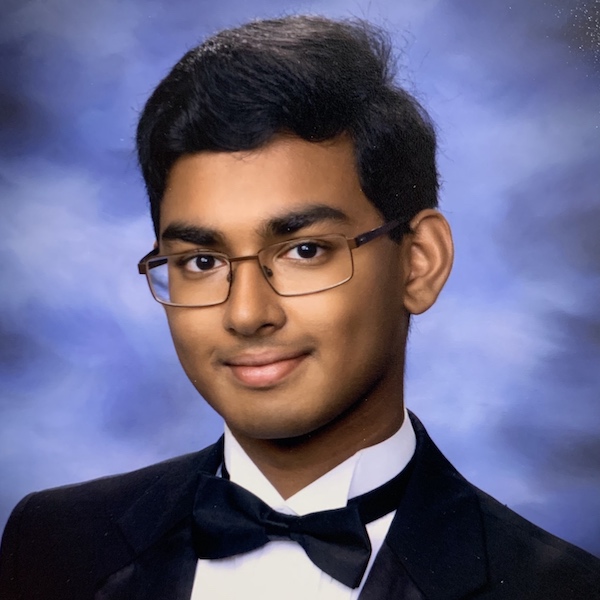

| 17:20 - 17:40 | Zane Durante | Closing Remarks |

Invited Speakers

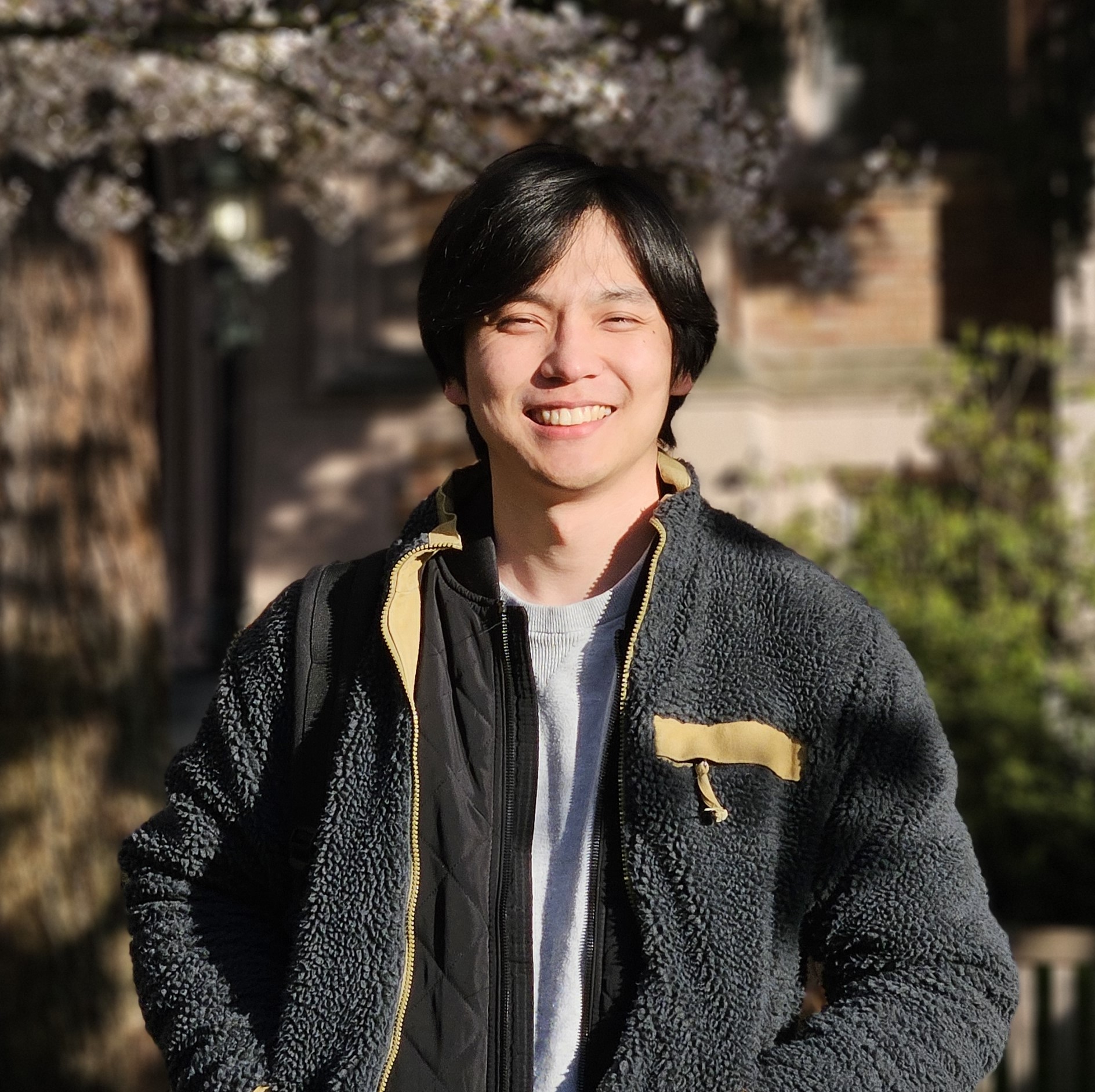

Our invited speaker list will be updated as we confirm additional speakers and approach the conference date.

Organizers

Sponsors and Organizations